How To Run FEDS on DPS

We use the MAAP Data Processing System (DPS) as a batch computing resource for processing large archival runs in addition to using it as part of the NRT system.

A given FEDS run generates outputs for a user-defined bounding box (region) and date range, generated with certain settings and a particular version of the FEDS codebase. When FEDS is run on DPS, it reads input data from our shared S3 bucket and copies outputs back to that bucket.

This doc explains how to define a new region (e.g. CONUS, RussiaEast, etc.) to run FEDS on, how to customize which settings it uses, how to run a region manually using the manual v3 workflow, and how to create a new scheduled job to continue updating your new region in NRT if necessary.

Defining a New Region

The example below will use the newly created “RussiaEast” region to explain how to define a new region and process it on DPS.

- Pick a unique, descriptive region name that does not already exist in FEDSpreprocessed (e.g. “PlaceExample”).

- Create a .env file defining the settings you want to use for your region. The meanings of and options for each setting are defined in FireConsts.py:Settings. Here is an example .env file:

      FEDS_FTYP_OPT="global"
      FEDS_CONT_OPT="global"
      FEDS_EPSG_CODE=6933
      FEDS_FIRE_NRT="False"
      FEDS_FIRE_SOURCE="VIIRS"
      FEDS_remove_static_sources="True"

  Tip: Working with hidden files (those whose names start with a dot) is difficult in the MAAP ADE, because the GUI file explorer has no way to show them. Use the terminal instead. Alternatively, create a file called env, edit it with the GUI as needed, then rename it to .env when you are done.

      # one way to create a .env file directly from the terminal
      # (single quotes around the whole string keep the inner double quotes in the file)
      (pangeo) root@workspaceqhqrmmz1pim87fsz:~# touch .env
      (pangeo) root@workspaceqhqrmmz1pim87fsz:~# echo -e 'FEDS_FTYP_OPT="global"\nFEDS_CONT_OPT="global"\nFEDS_EPSG_CODE=6933\nFEDS_FIRE_NRT="False"\nFEDS_FIRE_SOURCE="VIIRS"\nFEDS_remove_static_sources="True"' >> .env

      # once we have created a .env file locally, inspect it
      (pangeo) root@workspaceqhqrmmz1pim87fsz:~# cat .env
      FEDS_FTYP_OPT="global"
      FEDS_CONT_OPT="global"
      FEDS_EPSG_CODE=6933
      FEDS_FIRE_NRT="False"
      FEDS_FIRE_SOURCE="VIIRS"
      FEDS_remove_static_sources="True"

- Copy the .env file to Settings.PREPROCESSED_DIR/${regnm}:

      # check that region does not already exist
      # (just showing how to list bucket key contents)
      (pangeo) root@workspaceqhqrmmz1pim87fsz:~# aws s3 ls s3://maap-ops-workspace/shared/gsfc_landslides/FEDSpreprocessed/
                                 PRE BorealManualV2/
                                 PRE BorealNA/
                                 PRE CONUS/

      # copy the .env to a new FEDSpreprocessed folder
      (pangeo) root@workspaceqhqrmmz1pim87fsz:~# aws s3 cp /projects/.env s3://maap-ops-workspace/shared/gsfc_landslides/FEDSpreprocessed/PlaceExample/.env
      upload: ./.env to s3://maap-ops-workspace/shared/gsfc_landslides/FEDSpreprocessed/PlaceExample/.env

      # check that it exists
      (pangeo) root@workspaceqhqrmmz1pim87fsz:~# aws s3 ls s3://maap-ops-workspace/shared/gsfc_landslides/FEDSpreprocessed/PlaceExample/
      2024-07-12 05:55:19         66 .env

  Note: When working with S3, we do not need to explicitly create a folder for the new region. Instead, when we copy the .env file to Settings.PREPROCESSED_DIR/${regnm}/.env, S3 infers from the pathname that it should create the new folder automatically.
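If you would rather generate the .env text than type it by hand, the following is a minimal Python sketch. The helper name render_env is hypothetical and not part of the fireatlas codebase; it only reproduces the quoting used in the example file above (strings and booleans quoted, integers bare) and adds the FEDS_ prefix to each setting name.

```python
def render_env(settings: dict[str, object], prefix: str = "FEDS_") -> str:
    """Render settings as .env text, one KEY=value pair per line.

    Hypothetical helper for illustration; not part of fireatlas.
    """
    lines = []
    for name, value in settings.items():
        key = name if name.startswith(prefix) else prefix + name
        # Quote strings and booleans as in the example file; leave numbers bare.
        if isinstance(value, (str, bool)):
            lines.append(f'{key}="{value}"')
        else:
            lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


region_settings = {
    "FTYP_OPT": "global",
    "CONT_OPT": "global",
    "EPSG_CODE": 6933,
    "FIRE_NRT": False,
    "FIRE_SOURCE": "VIIRS",
    "remove_static_sources": True,
}

env_text = render_env(region_settings)
print(env_text, end="")
```

The printed text can be saved to a file named env and renamed to .env afterwards, as described in the tip above.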

Running Manually

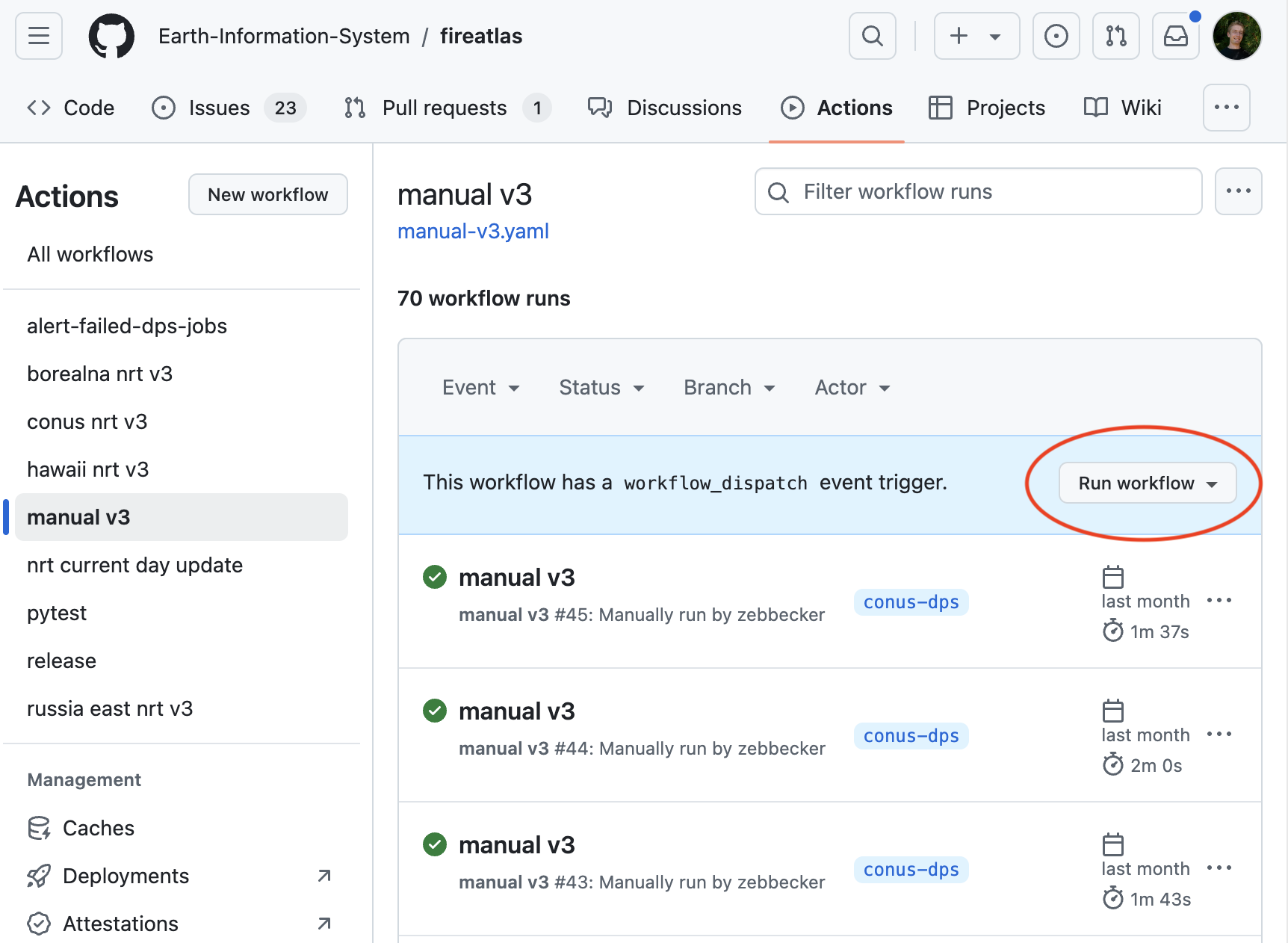

Now that we have defined a new region, we can manually trigger a run on DPS for a certain time period for that region with the GitHub Action “manual v3” workflow.

From the main GitHub repo, navigate to the workflow page for the manual v3 workflow. In the upper right hand corner, select “Run Workflow.”

Fill out the input parameters according to the descriptions in the prompt. The most important thing to note is that you should NOT pass the JSON encoded parameter input enclosed in single or double quotes, but rather naked. It should look something like this:

    {"regnm": "PlaceExample", "bbox": "[97.16172779881556,46.1226744036175,168.70469654881543,77.81982396998427]", "tst": "[2024,5,1,\"AM\"]", "ted": "[2024,6,1,\"PM\"]", "operation": "--coordinate-all"}

The action will submit your job to DPS. When the action completes, this does not mean that your job is done; rather, it means that it was successfully submitted for processing. You can use the MAAP ADE’s Jobs UI to monitor the progress of your job once it has been submitted. Outputs will be copied from the DPS worker to Settings.OUTPUT_DIR/${regnm} once the job is complete.
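The parameter input is easy to get wrong by hand because bbox, tst, and ted are themselves JSON-encoded strings nested inside the outer JSON object, which is why the quotes around AM and PM are escaped. As a rough sketch, the text to paste into the workflow form could be generated like this; build_json_params is a hypothetical helper, not something provided by the repo.

```python
import json


def build_json_params(regnm, bbox, tst, ted, operation="--coordinate-all"):
    """Return the JSON text for the workflow's parameter field.

    Hypothetical helper for illustration. bbox, tst and ted are encoded
    to JSON strings first, then embedded in the outer JSON object.
    """
    compact = (",", ":")  # no spaces inside the nested lists
    params = {
        "regnm": regnm,
        "bbox": json.dumps(bbox, separators=compact),
        "tst": json.dumps(tst, separators=compact),
        "ted": json.dumps(ted, separators=compact),
        "operation": operation,
    }
    return json.dumps(params)


params_text = build_json_params(
    regnm="PlaceExample",
    bbox=[97.16172779881556, 46.1226744036175, 168.70469654881543, 77.81982396998427],
    tst=[2024, 5, 1, "AM"],
    ted=[2024, 6, 1, "PM"],
)
print(params_text)
```

Paste the printed text into the form as-is, without wrapping it in extra quotes.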

The MAAP DPS currently has a 24 hour time limit on jobs. When running on very large regions, you may need to split your job up into smaller time periods in order to avoid this. For example, if you are running over all of CONUS, you should do it in 3 month increments, as these can typically be completed within 24 hours, but an entire year cannot.

The preceding job must be completed before the next job in the sequence is started so that the computation can pick up where it left off in the last job.
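To plan such a sequence, the sketch below splits one calendar year into consecutive three-month windows in the [year, month, day, "AM"/"PM"] form used by tst and ted. The function name is hypothetical, and starting each window on the first morning after the previous window ends is our assumption about how you would want to chain the jobs; adjust the boundaries if your runs need to overlap.

```python
import calendar


def split_year_into_chunks(year, months_per_chunk=3):
    """Split one calendar year into consecutive (tst, ted) pairs.

    Hypothetical helper for illustration; each chunk runs from the first
    day (AM) of its first month to the last day (PM) of its last month.
    """
    chunks = []
    for first_month in range(1, 13, months_per_chunk):
        last_month = min(first_month + months_per_chunk - 1, 12)
        # monthrange returns (weekday of first day, number of days in month)
        last_day = calendar.monthrange(year, last_month)[1]
        tst = [year, first_month, 1, "AM"]
        ted = [year, last_month, last_day, "PM"]
        chunks.append((tst, ted))
    return chunks


for tst, ted in split_year_into_chunks(2023):
    print(tst, "->", ted)
```

Submit the jobs one at a time in this order, waiting for each to finish before starting the next.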

Scheduling NRT Runs For Your New Region

Once you have tested your job manually, you can schedule it to run at constant intervals in order to update the outputs as new data is collected in NRT.

- Find an existing v3 scheduled workflow in .github/workflows/*.yaml, such as .github/workflows/schedule-conus-nrt-v3.yaml, and copy it:

      cp .github/workflows/schedule-conus-nrt-v3.yaml .github/workflows/schedule-place-example-nrt-v3.yaml

- Edit the new workflow and make sure to change some of the following sections and values. Note that leaving tst or ted as [] means it will run from current year 01/01 to now:

      name: <your region name> nrt v3
      ...
      - name: kick off the DPS job
        uses: Earth-Information-System/fireatlas/.github/actions/run-dps-job-v3@conus-dps
        with:
          algo_name: eis-feds-dask-coordinator-v3
          github_ref: 1.2.3
          username: gcorradini
          queue: maap-dps-eis-worker-128gb
          maap_image_env: ubuntu
          maap_pgt_secret: ${{ secrets.MAAP_PGT }}
          json_params: '{"regnm": "RussiaEast", "bbox": "[97.16172779881556, 46.1226744036175, 168.70469654881543, 77.81982396998427]", "tst": "[2024,5,1,\"AM\"]", "ted": "[]", "operation": "--coordinate-all"}'

- Make sure to add the username you use to register the new scheduled job to the maap_runtime/alert-on-failed-dps-jobs.py script if you want the alerting system to warn us when your scheduled job fails after being submitted to DPS.

Running Different FEDS Versions on DPS

By default, the production NRT system runs the latest tagged FEDS version, as detailed in the docs on releasing. However, you aren’t limited to using only that particular version of the codebase. You can build a DPS image that uses any version of the codebase you like, and run it as shown above. In this section, we will work through an example scenario in which we need to test experimental code on DPS without immediately releasing it into production.

- Create a new branch.

Note that in this specific case, unlike in the rest of the project, the branch name cannot contain the / character, as this interferes with the MAAP image build later on. So, instead of naming it zb/feature-branch, we will just name it feature-branch.

- In that branch, make whatever changes are needed.

- Push changes to your branch on GitHub.

- With your branch checked out, edit maap_runtime/coordinator/algorithm_config.yaml:

  - Give it a new algorithm name that is different from the production name. For example, TEST-FEATURE-eis-feds-dask-coordinator-v3.

  - For algorithm_version, where it might currently say something like 1.2.3, put the name of your branch instead: feature-branch in this case.

- Run through the maap_runtime/register_all.ipynb notebook to register the new algorithm you just created. This will cause DPS to:

  - Check out your branch from remote

  - Build a new DPS image that has the code from your branch installed on it

  - Return a log response including a job_web_url, which you can use to watch the image build in GitLab CI/CD and troubleshoot any problems

- Once the image has built successfully, it will be available on DPS. You can then use it via the manual v3 action on GitHub Actions as described above. However, a few changes are needed:

  - Use the workflow from your feature branch, not the main branch.

  - Make sure to input the changed algorithm name you created in algorithm_config.yaml.

  - Input your branch name instead of the latest version number in the “Branch name or version tag” field.

How DPS Jobs Pick Up Custom Settings For Your Region

There are several settings, such as EPSG code, that need to be changed depending on the region for which we are running FEDS. The default settings are those stored in FireConsts.py:Settings. Most custom regions will require some changes to these default settings.

The settings used to run a given region regnm on DPS are defined in a .env file stored in the Settings.PREPROCESSED_DIR/${regnm} folder. If there is no such file here, the default settings are used.

Settings.PREPROCESSED_DIR points to s3://maap-ops-workspace/shared/gsfc_landslides/FEDSpreprocessed in our current production setup. You can see existing regions there.

A few points describe how this all works:

- We use the package pydantic-settings in the fireatlas code to manage our settings. If you look at the class declaration in FireConsts.py you’ll see this piece of config below:

      class Settings(BaseSettings):
          # read in all env vars prefixed with `FEDS_` they can be in a .env file
          model_config = {
              "env_file": ".env",
              "extra": "ignore",
              "env_prefix": "FEDS_",
          }

  This tells us a few different things:

  - if there is a .env file available, read it and use the key/values there to override any defaults listed in this class

  - if there are variables in the .env file that are not declared in this class, do not use them

  - everything in the .env that will act as an override is prefixed with FEDS_ (so to override LOCAL_PATH in the .env you would declare FEDS_LOCAL_PATH=<value>)

- All DPS jobs are kicked off via the /maap_runtime/run_dps_cli.sh script. In that file there’s a single function and line that attempts to bring over the .env file for a DPS run (and exits gracefully if there is none):

      # TODO: this will have to be changed to be passed dynamically once we want to use other s3 buckets
      copy_s3_object "s3://maap-ops-workspace/shared/gsfc_landslides/FEDSpreprocessed/${regnm}/.env" ../fireatlas/.env

- Any imports of the fireatlas package should now have these overrides included in calls to python FireRunDaskCoordinator.py.
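To make the override rules above concrete, here is a simplified standard-library imitation of them. It is not pydantic-settings and not the fireatlas implementation: the function names, the default values, and the type coercion are all hypothetical, and real pydantic-settings does proper validation that this sketch skips. It only shows which lines of a .env file end up overriding a default.

```python
def parse_env(text):
    """Parse KEY=value lines, skipping blanks and comments, stripping quotes."""
    pairs = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        pairs[key.strip()] = value.strip().strip('"').strip("'")
    return pairs


def resolve_settings(defaults, env_text, prefix="FEDS_"):
    """Apply prefixed .env values on top of defaults (illustrative only)."""
    resolved = dict(defaults)
    for key, value in parse_env(env_text).items():
        if not key.startswith(prefix):
            continue  # only variables carrying the prefix are considered
        name = key[len(prefix):]
        if name in resolved:
            # naive coercion to the default's type (int and str only here)
            resolved[name] = type(defaults[name])(value)
        # names not declared in defaults are ignored, like "extra": "ignore"
    return resolved


# Hypothetical defaults, chosen only for this example.
defaults = {"LOCAL_PATH": "data", "EPSG_CODE": 4326}
env_text = 'FEDS_EPSG_CODE=6933\nFEDS_NOT_A_SETTING="x"\nEPSG_CODE=1234\n'

resolved = resolve_settings(defaults, env_text)
print(resolved)
```

Here only FEDS_EPSG_CODE takes effect: FEDS_NOT_A_SETTING is not a declared setting, and EPSG_CODE lacks the FEDS_ prefix.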